Their creator has disavowed them. People cannot agree on what a story point even represents. The measure is different for every team that uses it. They sow confusion, create conflict, produce unreliable timelines, are easily gamed, and demotivate your team while degrading its performance.

For everyone involved, this is a waste of time. Let's take a deep dive into why Story Points are so broken and how to avoid dealing with them ever again.

You knew it from the first moment somebody tried to explain story points to you. Something in the back of your mind said, "Okay...but what if...?" a lot. You were reassured. Everybody on the team was using it. All of the other teams were too. Eventually, this would make sense. You probably just don't understand it well enough yet. Somebody on some team somewhere has been using this successfully for years!

Turns out, you were right all along.

But story points came from a reasonable place. Estimates are needed to project costs and timelines, but estimates are also difficult when there are a lot of unknowns. It is perfectly reasonable for the business side of your company to want that information...but this approach simply doesn't work. It can't...by design.

So let's first explore what story points are supposed to be, how they went sideways and then look at a better way to invest your team's time in useful activities that also give the business what they need. This is a long journey and before we start explaining how to fix the problem, we first have to thoroughly understand what the problem is in the first place. I thought about breaking this into two separate posts, but both work better in a shared context.

This is a long and detailed piece, so here are the highlights. Read on for the details.

According to Atlassian...

“Story points are units of measure for expressing an estimate of the overall effort required to fully implement a product backlog item or any other piece of work. Teams assign story points relative to work complexity, the amount of work, and risk or uncertainty.”

We've only just read the definition and we already have multiple competing ideas.

We've introduced confusion from the start. Whenever you see a story point value, does it typically include a breakdown of what it represents on each ticket? I've seen it attempted once but never consistently applied.

In practice, Story Points are typically explained as a relative measure of complexity. Complexity is a big enough word that it can encompass a lot of details.

I've previously explained it on this blog using a Rubik's Cube. A team can theoretically agree on the complexity of the task even though the time to solve it may vary wildly. Some people may know how to solve it in about 5 minutes. Others may be unable to solve it at all after weeks. But the team can agree that it's a complex problem and give it a point rating relative to their other work.

If another story has been agreed upon to have a complexity rating of 1 then by comparison, this story is a...5 maybe? I guess?

And this is where the critical, big word comes into play: relative. It is entirely possible for two different teams to rate the exact same item with wildly different point ratings. One team could consider the rating to be a 5 while another could consider it to be a 20. If you embrace that these complexity measures are relative for each team, this is fine.

So if we know that measures are relative and will differ wildly from team to team, what should we do?

Correct! We should compare teams based on how many points they complete!

That, of course, would be silly. In practice, this happens all the time. It's an easy trap.

For example, "How did that team rate story X as an 8!? That's a 2 at best! This team has something much more complicated and they only rated it a 5!"

This is a hypothetical exchange that I have heard variations of many times. These relative measures for each team are used to compare teams against each other at the management level. It isn't supposed to happen that way but it does because people outside of the teams will inevitably begin looking at these Story Points.

Story points work on a Fibonacci scale, so 1, 2, 3, 5, 8, 13, 21 (or 20 for Scrum), etc. They get wider at the top in order to represent variability, so while a 1 may be a very precise representation, a 13 is really a range of about 9-19. This makes sense when it is explained.

If we know a 1 is a 1 but a 13 is a less precise range, what should we do?

Correct! We add them all up and pretend that the sum accurately reflects something meaningful!

That, of course, would be silly. Let’s talk about Velocity.

According to Atlassian...

"4. Sum points to find velocity. Next, you need to total the story points for all the completed user stories. The sum of the story points represents the sprint velocity."

This is the moment where Story Points truly go sideways. They are represented by numbers and naturally, we want to do something with those numbers. Unfortunately, you can't add 1 + a range of 9 to 19. In this context, twenty-six 1-point stories are very different from two 13-point stories. The former has many small tasks with almost no uncertainty. The latter has two very large tasks with a great deal of uncertainty.
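To make the arithmetic concrete, here is a minimal sketch that sums ranges instead of single numbers. The only range taken from the text is 13 representing roughly 9 to 19; treating a 1 as exact and the names `POINT_RANGES` and `sprint_range` are assumptions made for illustration.

```python
# Assumed ranges for illustration: a 1 is treated as exact,
# a 13 covers roughly 9 to 19 as described above.
POINT_RANGES = {1: (1, 1), 13: (9, 19)}


def sprint_range(stories):
    """Return (nominal_sum, low, high) for a list of story point values."""
    nominal = sum(stories)
    low = sum(POINT_RANGES[p][0] for p in stories)
    high = sum(POINT_RANGES[p][1] for p in stories)
    return nominal, low, high


many_small = sprint_range([1] * 26)  # twenty-six 1-point stories
two_large = sprint_range([13, 13])   # two 13-point stories

print(many_small)  # (26, 26, 26)
print(two_large)   # (26, 18, 38)
```

Both sprints report the same 26 points, yet one is a precise 26 and the other could land anywhere from 18 to 38. The single summed number hides that difference.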

“When a measure becomes a target, it ceases to be a good measure.” - Goodhart's Law

When we simply add these numbers up expecting the average over the last couple of weeks to represent the future, we are setting ourselves up for a roller coaster. Teams will be compared based on their velocities. We've already talked about how each team will measure differently, but it is virtually impossible for somebody in management to hear a report saying "Team X completed 50 points this sprint, Team Y completed 20 points this sprint..." and not come away with the impression that Team X is performing better than Team Y. Sometimes you will even see this lead to arbitrary points targets of "every team should be completing 50 points per sprint!"

“...Starting today, all 1-point stories will be called 2-point stories! Poof! I've doubled my velocity! Problem solved! …” - Allen Holub @allenholub

Points are a relative measure. Teams will measure the same things differently. And people have to be constantly reminded of that, which is a problem in and of itself. In order to account for this, many teams have attempted to convert to non-numeric estimates such as T-shirt sizing like S, M, L, XL, XXL. This is a defense mechanism against problems that are already happening.

Worse still, the default function of Velocity is to convert points to time as "Average Points per Sprint", which typically breaks down to X points / 2 weeks. It's built right into the formula.

Points are not time! That was not one of our many potential competing definitions given above, but by insisting on the use of Velocity we de facto convert points into the one thing they are not in any way meant to represent.

So knowing that points are not time and are highly variable, what should we do next?

Correct! We should set deadlines! And tell our customers about them!

That, of course, would be silly. And yet it happens all the time, creating unnecessary embarrassment for the entire organization when those deadlines are inevitably missed.

These are all known and well-established problems that lead to turmoil throughout the industry. You'll frequently see discussions about terrible Agile, Scrum, SAFe, etc. experiences on developer forums. In my opinion, they all have the same root cause...Story Points and the confusion that is naturally built into them.

The creator of story points, Ron Jeffries, has since disavowed them.

"I like to say that I may have invented story points, and if I did, I’m sorry now. Let’s explore my current thinking on story points. At least one of us is interested in what I think." ... "I certainly deplore their misuse; I think using them to predict “when we’ll be done” is at best a weak idea; I think tracking how actuals compare with estimates is at best wasteful; I think comparing teams on quality of estimates or velocity is harmful." - Ron Jeffries, Story Points Revisited

Scrum tried to fix this in 2011 when “Commitment” was changed to “Forecast” in an effort to at least get the language to better convey the goal of the process, but numerous Scrum teams still use "Sprint Commitment" today, creating relentless arbitrary deadlines, scrambles and technical debt every two weeks as a side effect. The moment this starts happening, teams will be forced to aim low or pad estimates in order to avoid missing their "commitment."

Worse still, teams accomplishing more than the estimated work will often be accosted for their bad estimates rather than praised for exceptional performance...and yet this is the only way to increase Velocity. That's math. If you are scrutinized for accomplishing more than the average, the average cannot increase. This pattern will psychologically put your entire organization in a race to the bottom. Everyone is better off when we are encouraged to aim high.

People asking for estimates care about time, so points always get converted to some form of time / projection, whether you see it or it's happening behind the scenes.

And this is reasonable. I know, stay with me.

The business needs to make decisions about how to get the best results with the resources they have available: Time, Money, Tools and People. They make those decisions by prioritizing work to get the best Return on Investment. Feedback from customers, sales, product research and more is used to try to assign an expected value to upcoming work that is being considered. Then that value has to be considered against the projected cost of completing that work.

Return on Investment = Net Return / Cost of Investment

Your development estimates make up the Cost of Investment in that formula, while the Net Return will be represented by a combination of market research, customer feedback and institutional knowledge.

Many projects with lower costs may result in a greater return for the business than a very high value project with comparatively high costs. Often that is the case because bigger projects typically have high variability and a higher likelihood of cost overruns, as well as typically longer feedback cycles. This is something that story points try to account for with the Fibonacci sequence that we discussed earlier. You're assuming higher variability with the higher numbers that represent a range. In isolation, this approach actually works well for valuation when it's difficult to generate real numbers for the Net Return.
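As a rough illustration of the formula, the sketch below compares three small projects against one large one. Every figure is hypothetical and chosen only to show the shape of the comparison; the 50% cost overrun on the large project is likewise an assumption.

```python
def roi(net_return, cost):
    """Return on Investment = Net Return / Cost of Investment."""
    return net_return / cost


# Hypothetical (net_return, cost) pairs in the same arbitrary currency unit.
small_projects = [(30_000, 10_000), (24_000, 8_000), (36_000, 12_000)]
big_project = (120_000, 60_000)

small_return = sum(net for net, _ in small_projects)
small_cost = sum(cost for _, cost in small_projects)

combined_small_roi = roi(small_return, small_cost)
big_roi = roi(*big_project)

# Assume the big project overruns its cost estimate by 50%.
big_roi_with_overrun = roi(big_project[0], big_project[1] * 1.5)

print(combined_small_roi)              # 3.0
print(big_roi)                         # 2.0
print(round(big_roi_with_overrun, 2))  # 1.33
```

With these made-up numbers the small projects together return less in absolute terms but more per unit of cost, and the gap widens once the large project's cost estimate slips.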

The business needs your team estimates in order to project the potential cost of different initiatives to help them prioritize.

So, let's get better at estimating! Software Estimation: Demystifying the Black Art is an excellent book to help. It does a fantastic job of breaking down the various challenges and realities involved in the estimation process and will teach you valuable techniques such as...

This is all good advice and true. It's an excellent book. The problem is that it also shows just how hard estimating really is, especially if there's no similar past work for comparison. When you're providing multiple estimates and adjusting as things progress, the estimates are not firm because ultimately they are truly a best guess. This reflects reality, but it doesn't help the business with the time and cost projections that they really wanted.

Estimates are hard. Using points makes them harder. The more variables involved the less accurate they get, including the number of people making the estimate.

The way a business operates changes quickly when it starts to realize that everything about how the company operates is a system in and of itself, with every activity requiring inputs and outputs. Meetings are a great example. What preparation went into this? What are the time costs for the people to attend? What is the output from the meeting and was it worth it?

People rightly see many meetings, especially recurring meetings, as a waste of time and that is simply because the costs aren't being considered while the output typically isn't valuable. The "this could have been an email" trope arises from these collective frustrations. Shopify went as far as cancelling all recurring meetings of 3 or more people on their calendars!

So how much time are we spending on Story Points? What are the inputs? In many environments you'll have regular grooming sessions to plan ahead of upcoming work. The team will be pulled into a meeting for a few hours every couple of weeks to go through this process and the process will vary at many companies. Some will do it better than others and I have seen a lot of variety over the years.

Some will try to keep the process as fast and high level as possible, attempting to optimize for minimal meeting time. Which stories have we been asked to provide points for? Let's go through them one by one, discussing each story and then using planning poker to settle on an agreed upon point total for each one for the team. There may be some back and forth involved as the team members discuss their reasoning for something being rated higher or lower. Some details will come up, some valid points, some architectural concerns. Maybe a dependency will be identified? You identify some information that you need clarity on, so a Spike is created in your next sprint or iteration to research it to clear things up (which is good!).

Are these discussions being written down? In my experience the answer is usually "No" or "Rarely" for most teams. They'll settle on the point value and move on to the next thing because they want to get this over with to get back to work. Some will take it further and include some implementation plans and notes, which are definitely warranted.

What happens when the team has turnover? What happens a few months down the line when this work comes back up based on the points that were given previously? Are they still accurate? Does the current team feel they have the same understanding? Do we need to go through the process again and re-score it? What happens when the scope changes? Are we allowed to change the points? Does anyone really remember the details that we talked about?

Is it possible to have some success with this approach? Sure. You need smaller teams, who communicate well and have worked together long enough to rely on each other while having enough experience with the system to have high level conversations knowing everyone is on the same page. Simple. Unless you have that, it's going to be turtles all the way down. There's no escaping it. Some companies, like 37Signals, have even had success capping team sizes at two developers, and Pivotal Labs has had success with full-time pair programming, which also works.

We invest a lot of time in this process of estimating with Story Points but the output is not particularly valuable beyond the immediate conversations that just took place, with the people who were present for it. I have personally seen teams spend over 30 minutes simply discussing whether a story was worth 3 points or 5 points, without writing down a single detail of their reasoning following the discussion!

Then the business will use these unreliable numbers to make all sorts of judgement calls from performance to projection. The numbers themselves were broken before you even started. You're running a race that you can't possibly win.

This is where the alternatives come in.

We already talked about estimating with T-shirt sizes to at least prevent the process of adding up story points and for some this approach actually works quite well. It defuses most of the issues with velocity metrics by clearly indicating that it can't be added up, as well as preventing progress logging since you can't log a unit of XL. You're still probably going to run into the relative estimating problem across teams ("This team finished 10 XL items while this team only finished 10 Mediums...") but to some degree that is unavoidable. You're still going to have the estimation accuracy issues of best case, worst case and realistic as well as the need to adjust as new information is discovered or scope changes. "Are you allowed to change the estimate after the fact?" is still an issue that remains in many organizations.

We know the business side of the house already wants to convert the estimate to time, so why don't we simply...estimate with time? You're going to run into similar issues here as with T-shirt sizes or story points. Did the team estimate the time? Who agreed to the estimate? Who is actually working the problem and do they have the knowledge necessary to complete it in that amount of time yet? The redeeming factor of estimating in time is that at least we're speaking the same language. That's a win.

The problems come when people hear, "This will take 2 weeks." Inevitably, this creates confusion because when a dev team estimates the time they often mean "This will take 2 weeks with our entire team if we have no interruptions once we start on the work next month after this other dependency has been resolved..." which isn't written into the criteria. Other work is going to come up on a live system. When you say 2 weeks to people on the business side of the house, they often hear "2 weeks" without any of the other ceremony. So when the result is two weeks' worth of actual work spread across many interruptions and waits on other people, and the real timeline ends up closer to a month and a half, it creates a perception that your estimates are bad, even if the two weeks' worth of actual work was perfectly on target.

Time can work when you're small. That same single developer or pair of developers who have worked together for a while can probably agree on a timeline and hit it for you consistently. As the team grows, as people move around, into or out of the organization, and as experience levels diverge, it will vary dramatically just like every other type of estimate. As the estimate ages, when your team said "This feature will take 2 weeks" about 6 months ago, "2 weeks" might not be accurate anymore for any number of reasons from team changes to other work that was or was not delivered.

The end of the time tunnel is usually inevitable: meticulously logging time on every single item that you've worked on just to prove that you have indeed been working 40 hours a week even though that 2 week story took a month and a half. Sometimes it's avoided. Other times it will be asked for to track progress, even though "spent 4 hours" doesn't give any indication of how close the work is to being completed. You could have spent 4 hours staring at the screen drinking coffee.

Estimating with points, time and numerous other approaches will all come with a lot of pitfalls. When you combine that with the amount of time typically invested in producing these estimates it can feel like a huge waste, especially when the output of that estimate becomes less accurate over time. Estimates are great when you know just about everything up front and can look at previous work to project how long something will take. In building construction for example, an architect will draw up plans, you know what the permitting process looks like and the time for assembly with parts is reasonably consistent.

In software development, you typically do not know everything up front. That's where Agile methodologies have their roots, accepting this reality. So if we know that we probably don't know everything up front, why are we trying to stick to estimates in those exact same methodologies?

That doesn't mean we can't project both time and cost. We can but you have to change your view of an estimate to something that naturally deals with all of the complications highlighted above. Things change. Teams change. Systems change. Dependencies change. Estimates change.

A few years back when I published Reality Driven Development on here, one of the comments from Hacker News pointed me in the direction of this book, The Principles of Product Development Flow: 2nd Generation Lean Product Development. It's not a light read but it includes a significant amount of math, psychology and economics of management that will prove why most of the problems that you see in an organization exist. It's an excellent read that parses through a mountain of science and I can't recommend it strongly enough.

Something not many people realize is that a significant amount of the Scaled Agile Framework (SAFe) is based on this book. I would estimate probably 70% and I am certified to teach it as a SAFe Practice Consultant (SPC). But SAFe itself retains many of the practices that lead to all of the problems described above. My assumption is that they do this to make it easier to sell SAFe to organizations who might already be using Scrum because it won't seem like quite as dramatic of a shift. There are a lot of wonderful things about SAFe by the way, but I found Donald first and Donald got it right.

"Queues are the root cause of economic waste in product development." - Donald Reinertsen

Reinertsen doesn't keep any of the broken parts and that starts with his approach to estimating and prioritizing, which he calls Managing Queues. Queue Length specifically.

This means that your focus is on the efficiency of the organization as a system and your goal is to ensure the flow of work is maximized. That's a fairly standard concept and there's nothing new here. You'll see the idea in plenty of methodologies like Kanban with its focus on identifying and resolving bottlenecks.

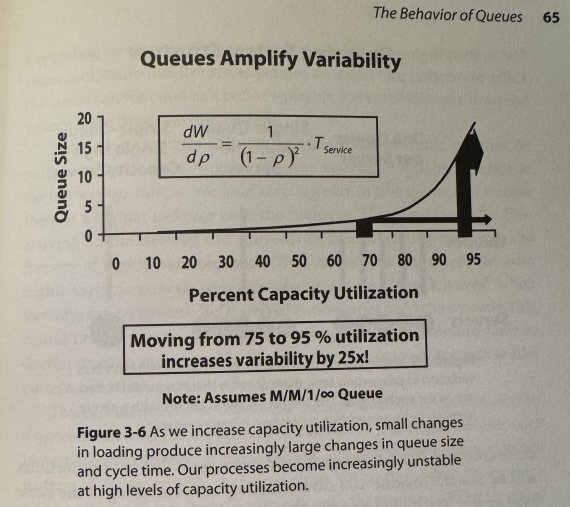

First off, a queue is simply a list of work to be done. A backlog of stories. A list of tasks. When discussing queues in Reinertsen's terms, we're focused on two things: Queue Size and Capacity Utilization. The closer you move to 100% capacity utilization, the larger your queues will grow, which will dramatically increase the variability of all of your work.
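To get a feel for how sharply queues grow near full utilization, here is a minimal sketch that assumes the simplest textbook queueing model (M/M/1: random arrivals, random service times, one server). That model choice is an assumption made for illustration; real teams are messier. In that model the average number of items in the system is utilization / (1 - utilization).

```python
def average_items_in_system(utilization):
    """Average number of items in an M/M/1 system at a given utilization.

    utilization is a fraction in [0, 1); the formula diverges as it nears 1.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in the range [0, 1)")
    return utilization / (1 - utilization)


for u in (0.5, 0.8, 0.9, 0.95):
    items = average_items_in_system(u)
    print(f"{u:.0%} utilized -> about {items:.1f} items in the system")
```

Under this assumed model, going from 50% to 95% utilization moves the average from about 1 item to about 19. Small increases in load near the top produce very large increases in queue size.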

This is the reason your estimates are always wrong. The moment you and your team are overloaded, things will continue getting worse. Queuing Theory has been around since 1909 thanks to its creator Agner Krarup Erlang (the same man the Erlang programming language is named after) and has been verified and replicated across numerous disciplines, including management science.

You must do some type of capacity planning and leave a capacity buffer to tolerate variability. That doesn't mean we leave buffer time and then fill the buffer with meetings. It means we leave the buffer and fill it with nothing, otherwise it's not a buffer! In order to do capacity planning, we have to do some type of estimation and we have to track some type of historical progress to even know what the capacity is in the first place.

Here lies the issue. How do we estimate in a way that generates value for the time we put into the process, remains accurate over longer periods of time, allows us to track a rate of progress for purposes of capacity planning, deals with changes in scope from new feedback and avoids breakdowns in estimating patterns across different teams?

As a team, ensemble/mob programming style, you talk through the feature to break it down into tasks.

Allow me to explain why and how this works.

First, "as a team" does not mean having somebody in a Senior Developer or Architecture role break it down for you and do a hand-off. That robs everybody else on the team of the opportunity to discuss the problem, understand it, contribute to the solution and learn. "As a team" means "the whole team". If you have a 2 person team, this is no different than a Pair Programming approach. Mobbing or Ensemble programming is just pairing with a larger group where different team members take turns typing while everybody else discusses and advises.

We're doing the same thing without the actual code writing at this phase. It's exactly what you'd be doing during a Grooming session as you all talk through the problem to discuss the point value, except that rather than producing a meaningless number for each story you'll produce something closer to an implementation plan breaking the work down into tasks. You may need to create charts and diagrams as part of the process of the discussion, which is great!

As a team you will walk through the entire implementation, mapping out the flow every step of the way. What tests do we need? Are there database changes to be made? How does this tie into other parts of the system? Does this functionality already exist somewhere? Do we have knowledge about how each aspect of the system works? What should we do to validate the work? Should A/B testing be implemented as part of this design proposal? How do we go about implementing that? Do we need an additional library to achieve this and if so, which one? Do we have knowledge of that tool? How do we implement it? Does Bob want it to be done this way or that way? We should ask.

“In the presence of uncertainty, acquiring information is often the best way to generate value. And, yes, this is the point in this article where I tell you to read Donald Reinertsen’s Principles of Product Development Flow. ” - Dan Milstein

Your goal is to come out of this process with a known task list and an identification of Uncertainties, if any. Some uncertainties can be resolved quickly, during the session by having a couple of team members look into it right there during grooming. If it looks like any of these uncertainties cannot be resolved quickly and require more exploration, then document them and create exploratory/research work to get answers. Typically, these are called Spikes in most agile methodologies. You may have to build a prototype of part of the system. Once you have eliminated the uncertainties, come back to grooming and finish building the task list with your new information. The moment that you have a mapped out feature with no remaining visible uncertainties, you have a more reliable means of estimation in your hands.

I remember having a home inspection completed before buying a house and the inspector identified a few areas where he didn't include many details, but said "You may want to have a plumber, HVAC or electrician look at these." I didn't think any of them were a big deal, so we went ahead and moved in thinking all the house needed was some fresh paint and refinished floors. Then we had those "other" things checked out and each one was a big enough problem that I had to take out a home equity loan just to repair them. The inspector identified uncertainties, but I didn't explore them before moving forward and the result was a renovation cost 4 times higher than what I expected to pay.

"1 hour of planning can save you 1000 hours of coding. 1 hour of coding can save you 1000 hours of planning. There is no science to figuring out which case you’re in. Only art." - Robert Roskam

The output that you will have from this process comes in several forms. First, your team will have gained more experience talking through technology problems with each other which works as a natural team building and trust exercise. Everyone who participated in the process will have a deep understanding of what needs to be done to complete the feature. If a different team were to look at this later, they'll understand exactly what the expectations around implementation are while having a clear picture of the anticipated scope of work. You make an effort to break it down into the smallest reasonable steps. This also aligns with the XP practice of Getting Small Stories, essentially forcing the practice through the small task breakdown.

And critically, you're going to be left with a list of tasks. A list of tasks is...a queue. You can take these tasks and group them into sections, stories or issues that can be assignable to different people or parts of the team to be worked in parallel.

What we care about is the total number of tasks. The total number of tasks gives us our queue size, which provides us with what we will call the job size.

So how do we turn that job size into some type of projection? We replace our Story Point velocity with an Average Task Rate. Exactly the same idea, except we have replaced an unreliable number in Story Points with a significantly more reliable number of small broken down tasks after removing uncertainties.

Now, if you're paying attention and reading this with a critical eye something in your brain just told you "Wait, this is just waterfall! We can't know everything up front!"

You can't know everything up front. 100% correct. We know that even after breaking this down and making the best plan that we can within reason, we will still get new information. We will still receive feedback as work progresses that may change our course from the initial plan. There's no such thing as "scope creep" in Agile. Reacting to fast feedback is literally the point.

With this approach...you update the tasks with the new information. Your team can mob around the new information and add new tasks and remove tasks that no longer apply. Plus, you will document exactly when, why and how this change in scope happened which has a side effect of live updating your entire projection as the project progresses.

Feature B is now 80 tasks instead of 50. When anyone not directly involved with the project asks why it's taking longer than they thought, the answer will be spelled out in the tasks list. These changes were added on these dates, for these reasons based on this feedback from these people. There is no "your estimate was wrong" situation. There is no "re-estimating" process. There's not even an ask to approve if you can change the point value. It just happens.
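One way to picture this is a task count that carries its own change log. The sketch below is only an illustration: the class names, dates and reasons are hypothetical, and in practice this information would live in whatever issue tracker the team already uses.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ScopeChange:
    """One documented change in scope: when it happened, why, and its size."""
    when: date
    reason: str
    added: int = 0
    removed: int = 0


@dataclass
class Feature:
    name: str
    initial_tasks: int
    changes: list = field(default_factory=list)

    def record(self, change):
        self.changes.append(change)

    @property
    def total_tasks(self):
        # The current job size is the original breakdown plus every logged change.
        return self.initial_tasks + sum(c.added - c.removed for c in self.changes)


# Hypothetical history for Feature B growing from 50 to 80 tasks.
feature_b = Feature("Feature B", initial_tasks=50)
feature_b.record(ScopeChange(date(2024, 3, 4), "customer feedback on export format", added=25))
feature_b.record(ScopeChange(date(2024, 3, 18), "security review findings", added=10, removed=5))

print(feature_b.total_tasks)  # 80
for change in feature_b.changes:
    print(change.when, change.reason, f"+{change.added} / -{change.removed}")
```

The total is never re-estimated. It is simply recomputed from the log, and the log itself answers the question of why the number moved.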

Progress is naturally tracked as tasks are checked off the list while work completes. You don't have to pretend that logging an hour or a point is a useful measure of progress anymore. As other work appears, interrupts and takes precedence at times your team will document the tasks involved in that work too.

If the team's task rate suffers, you can tell that some type of problem may have been introduced. As your processes, tooling and automation improve, the task rate should increase, providing a meaningful performance indicator that brings value to your team.

What's more, nobody ever had to ask your team for an estimate. Not a single developer was asked how long they thought something would take or how complex something was supposed to be. There is no psychological pressure to say, "Yes, we can get it by that time!" Your product team can see that Feature A is 250 tasks, Feature B is 50 tasks and that your team completes tasks at an average rate of 40 tasks per week to project a reasonable completion time on their own. Feature A would probably take a little over 6 weeks. Feature B would probably take a little over 1 week.
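The projection is simple enough to sketch in a few lines. The task counts for Feature A and Feature B and the 40 tasks per week come from the example above; the individual weekly counts used to arrive at that average are assumed for illustration.

```python
def average_task_rate(completed_per_week):
    """Average number of tasks completed per week over the observed weeks."""
    return sum(completed_per_week) / len(completed_per_week)


def projected_weeks(task_count, tasks_per_week):
    """Projected weeks to finish a queue of tasks at the given average rate."""
    if tasks_per_week <= 0:
        raise ValueError("tasks_per_week must be positive")
    return task_count / tasks_per_week


# Hypothetical recent history that averages out to 40 tasks per week.
rate = average_task_rate([38, 42, 41, 39])

print(rate)                        # 40.0
print(projected_weeks(250, rate))  # 6.25 -> Feature A, a little over 6 weeks
print(projected_weeks(50, rate))   # 1.25 -> Feature B, a little over 1 week
```

Because the inputs are just the current queue size and the observed rate, anyone on the product side can rerun the projection whenever either number changes.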

You get more accurate projections and estimates because you stopped estimating. As information changes, the estimate naturally adjusts.

Compare this with a story pointing exercise. The team gathers around a story, discusses the problem and settles on a number after a while. There's no intellectual requirement to participate in order to provide a number. You can just vote in the middle or try to match what other team members are suggesting for the value. Anyone can coast through a story pointing meeting with minimal engagement. You've been in these meetings, especially remote ones where the cameras stay off and only a couple of team members are talking. Can it be done better? Sure. But it's very easy to do poorly.

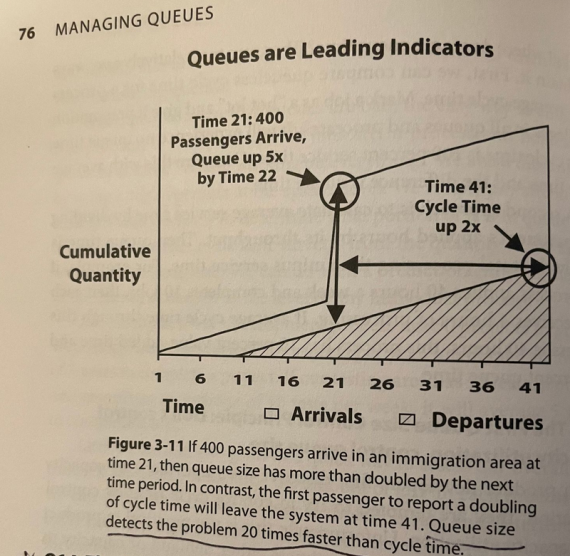

Average Task Rate, Cycle Time and Velocity are all trailing indicators. A problem must already exist in order for these metrics to let you know about it. Queues, on the other hand, are a leading indicator. If the queue size grows you can see it coming well in advance.

What if, after starting work on Feature A at 250 tasks, we went through a couple of rounds of feedback and the total grew to 500 tasks? If you're measuring your queues, you can see that this feature is much larger than expected and potentially re-evaluate whether work should continue.
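To make the leading indicator concrete, here is a small sketch that compares a feature's current queue size against its original size and flags it for re-evaluation. The snapshot values and the 1.5x threshold are assumptions for illustration only; the 250 and 500 endpoints come from the Feature A example.

```python
def queue_growth(initial_size, current_size):
    """Ratio of the current queue size to the originally planned size."""
    if initial_size <= 0:
        raise ValueError("initial size must be positive")
    return current_size / initial_size


def should_reevaluate(initial_size, current_size, threshold=1.5):
    """Flag a feature whose queue has grown past `threshold` times its plan.

    The 1.5 default is a hypothetical threshold, not a recommendation.
    """
    return queue_growth(initial_size, current_size) >= threshold


# Hypothetical queue size snapshots for Feature A after each feedback round.
snapshots = [250, 300, 500]
flags = [should_reevaluate(snapshots[0], size) for size in snapshots]
final_growth = queue_growth(snapshots[0], snapshots[-1])
```

The flag fires as soon as the queue is measured, well before any change would show up in an averaged rate.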

If we get feedback on an existing Story, do the points get adjusted at all? Do we just keep plugging along as if nothing has changed in a continual effort to deliver? If we don't adjust the points, our velocity will drop, giving the appearance that the team is just doing less work. But because velocity is an average, we won't really see the drop until farther down the road. If we adjust the points, the velocity will maintain its rate, but the shift in points might not provide an accurate indicator of just how dramatically the scope has shifted. We couldn't change direction because we missed the road signs.

In the example from the book above, we see what essentially amounts to a spike in the arrival rate to the queue. This applies to all queues, not just the system defined above. Little's Law states that the long-term average number of items in a stationary system (waiting in the queue) is equal to the long-term average effective arrival rate multiplied by the average time that an item spends in the system. The result applies to all systems and even subsystems.
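Written as a formula, Little's Law is L = λW, where L is the average number of items in the system, λ is the average arrival rate and W is the average time an item spends in the system. The sketch below is one way to play with it. The function names and the sample numbers are assumptions for illustration, and the law only holds for long-term averages in a stable system.

```python
def average_items_in_system(arrival_rate, time_in_system):
    """Little's Law: L = arrival rate * average time in system."""
    return arrival_rate * time_in_system


def average_time_in_system(items_in_system, arrival_rate):
    """Little's Law rearranged: W = L / arrival rate."""
    if arrival_rate <= 0:
        raise ValueError("arrival rate must be positive")
    return items_in_system / arrival_rate


# Illustrative numbers: 40 tasks arrive per week and each task
# spends 2 weeks in the system on average.
items = average_items_in_system(arrival_rate=40, time_in_system=2)

# Rearranged: a stable system holding 500 tasks with 40 arriving
# (and completing) per week implies this average time in system.
weeks = average_time_in_system(items_in_system=500, arrival_rate=40)
```

Notice that for a fixed arrival rate, a larger queue means every item waits longer. That is why queue size works as an early warning.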

In a product development scenario, we have the ability to control the arrival rate of our system based on the amount of work we place into the queue at any given time. This is one of the primary reasons to avoid having an endless backlog and instead to focus on smaller batches at a time, typically over a 6-12 week time period. By controlling the arrival rate while providing a buffer, we can prevent the system from being overloaded. In a scenario such as Feature A growing from 250 to 500 tasks, we may re-evaluate whether everything involved is necessary, or whether it could be broken into smaller, releasable parts.

Multiple queues feeding into the same system, however, can permanently congest the system if you don't have a means of controlling the flow rate. Projects have a beginning and an end. Products do not. A new system that has no customers, no feedback and hasn't launched yet will have an extremely predictable and rapid rate of work flowing through the queue from your team. The moment that system goes live with real customers, a new queue is created...the support queue. The Phoenix Project, which is a must read, calls this Unplanned Work. The support queue has a flow rate that is outside of your internal control. If work from the support queue also feeds into the same feature development team, it can use up their planned buffer, overload the system and cause variability spikes to cascade throughout.
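A toy simulation shows how quickly this happens. In the sketch below, a team with a capacity of 40 tasks per week plans 30 tasks of feature work per week, leaving a buffer of 10. Once the support queue starts adding 20 tasks per week, the backlog grows every week. All of the numbers and the `simulate_backlog` helper are hypothetical, and real arrivals would be far less regular than this.

```python
def simulate_backlog(capacity, planned_arrivals, support_arrivals):
    """Return the unfinished backlog at the end of each week.

    Each week, planned and support work arrive in the same queue and
    the team completes at most `capacity` tasks.
    """
    backlog = 0
    history = []
    for planned, support in zip(planned_arrivals, support_arrivals):
        backlog += planned + support
        backlog -= min(backlog, capacity)
        history.append(backlog)
    return history


capacity = 40                      # tasks the team completes per week
planned = [30] * 6                 # planned feature work, with buffer
support = [0, 0, 20, 20, 20, 20]   # system goes live in week 3

backlog_by_week = simulate_backlog(capacity, planned, support)
```

With these inputs the backlog is empty for the first two weeks, then grows by 10 tasks every week after launch. The planned buffer is gone and nothing in the system brings the queue back down.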

There is one way to control the flow rate from the support queue: quality control and rapid response to support issues. Higher quality control reduces the number of issues that could trigger support requests. Rapid response allows you to immediately deal with any issues that quality control didn't catch but your customers did. Address customer issues as your first priority, find and resolve the root causes, and improve your quality control process to ensure that it would catch similar issues in the future before your customers do. Some organizations have a dedicated production support team designed to absorb the influx from the support queue. That can work, but only if this team also has the authority to institute preventative measures that influence long-term quality control.

If you do that, you will reduce or stop the flow rate from the support queue and allow your team to focus on maximizing the flow of new features long term. If you choose to ignore or downplay the support queue to focus on shipping new features, the result will be a perpetually clogged system of developers that is always behind schedule. It's a mathematical certainty.

So here is the answer to our earlier question: How do we estimate in a way that generates value for the time we put into the process, remains accurate over longer periods of time, allows us to track a rate of progress for purposes of capacity planning, deals with changes in scope from new feedback and avoids breakdowns in estimating patterns across different teams?

We focus on understanding the problem as a team, documenting the work required and eliminating our uncertainties by breaking the work down into queues of tasks. We adjust the tasks as we receive new information or scope changes, and we track average task rates for the teams for projection and capacity planning without having to provide estimates at all. All of our time spent planning produces output that is both useful and reliable. Even months or years later, the task plan can be reviewed and adjusted if certain tasks are no longer required based on changes in the system during that time. As a bonus, we gain better accountability and agility by utilizing a reliable leading indicator to adjust our decisions early in the process.

Now we can more reliably prioritize our future plans based on the expected Return on Investment, using the Queue Size (Job Size) as a stand-in for the anticipated cost. To refine this even further, we can use a process Reinertsen calls Weighted Shortest Job First (WSJF), but that's another post for another day.

Story points are pointless, unreliable and designed to fail from their very conception. Instead, spend your time on something meaningful, valuable and reliable: measure queues.
